The pace of AI can make even a changelog feel out of breath. Tech Spotlights aims to slow the frame just enough to see what’s actually on the table: specific tools, platforms, frameworks, and the ideas shaping them. No hype, no takedowns-just clear, grounded looks at how things work, where they fit, and where they don’t.

Each feature examines substance over slogans. We look at design choices, supported workflows, integration paths, performance characteristics, resource costs, licensing, security and governance considerations, and the state of the surrounding community. When relevant, we include reproducible tests, limitations in plain language, and links to independent evaluations.

What you can expect from every spotlight: a concise overview; how it’s built and how it’s used; setup notes; a sample workflow; interoperability and deployment considerations; known pitfalls; viable alternatives; and a quick decision checklist to help you judge fit for purpose. Think of it as a field guide rather than a leaderboard-practical, comparable, and vendor‑agnostic.

Whether you’re selecting a stack, evaluating a pilot, or simply staying current, these spotlights aim to provide just enough light to make the next step deliberate.

Tool Capabilities Unpacked Evaluation methods privacy safeguards and integration paths

Turning raw feature lists into operational confidence starts with disciplined evaluation. Define a north star-clear, business-tied KPIs-and layer in multi-angle testing that blends offline rigor with live telemetry. Use golden sets curated by domain experts, adversarial suites that probe edge cases, and shadow deployments to watch behavior before impact. Report with model cards and eval cards that log datasets, metrics, and caveats, and track regression via versioned baselines. Balance quality, latency, and cost under realistic traffic shapes; slice results by cohort to surface fairness and robustness behaviors that aggregate metrics hide.

- Offline checks: task rubrics, F1/ROUGE/BLEU where appropriate, constraint satisfaction

- Human review: calibrated annotators with inter-rater reliability and rubric drift audits

- Safety stressors: red teaming, jailbreak probes, prompt collision tests

- Live trials: shadow mode, A/B with guardrails, feedback loops via structured tags

- Ops telemetry: latency percentiles, cost per successful outcome, failure taxonomies

Data protection and integration should be engineered, not implied. Enforce privacy-by-design with data minimization, PII tokenization/hashing, retention windows, and role-based access wired to audit trails. Prefer on-device or VPC inference when feasible; where sharing is needed, apply differential privacy, encrypted transport (mTLS), KMS-managed keys, and secret rotation. Isolate training and inference datasets, maintain data lineage, and enable DSR workflows for deletion and export requests. For rollout, choose integration paths that match team skills and SLAs, then wrap with policy engines, rate limits, and schema contracts so upgrades remain predictable.

| Integration path | Best for | Privacy posture | Notes |

|---|---|---|---|

| REST API | Quick pilots | mTLS + IP allowlists | Fast, vendor-managed |

| SDK | Feature teams | App-level secrets | Typed models, retries |

| Event bus | Async pipelines | Topic-level ACLs | Decoupled, scalable |

| DB plugin | RAG/search | Row/column masking | Vector + metadata |

| Edge runtime | Low latency | Local processing | Model quantization |

Platform Deep Dive Deployment workflows scalability cost control and governance

Deployment excellence begins with a lineage-aware pipeline that treats models like living products: code merges trigger containerized builds, automated evaluations gate promotion, and artifacts land in a signed model registry with immutable versioning. Progressive release patterns-blue/green, canary, and shadow traffic-pair with feature stores and policy-as-code to enforce reproducibility and risk controls from dev to edge devices. Telemetry loops close the gap between offline metrics and real-world behavior, enabling quick rollbacks and data-driven iteration without compromising security.

- Build & Verify: Reproducible containers, unit/contract tests, drift checks

- Promotion Gates: Bias audits, SLO conformance, signed artifacts

- Progressive Delivery: Canary, shadow, and rate-limited rollouts

- Observability: Traces, prompt/model logs, automated rollback hooks

Scalability hinges on adaptive concurrency and right-sized hardware pools: autoscaling based on latency SLOs, GPU sharing for batch/online blends, and serverless bursts for spiky inference. Cost control meets governance through quantization and distillation, spot/reserved mix, budget alerts, and chargeback reports aligned with RBAC, audit trails, lineage, and data retention policies. The result: reliable performance with transparent spend and compliance baked into every release.

| Env | Scaling | Cost Lever | Governance |

|---|---|---|---|

| Dev | 0-1 autoscale | Spot + sleep | RBAC + signed images |

| Staging | Canary 10% | Scheduled scale | Audit logs + policy checks |

| Prod | HPA + GPU pool | Reserved + quantized | Lineage + approvals |

Framework Choice Guide When to favor PyTorch JAX or TensorFlow for training and inference

Choosing the right deep learning framework hinges on your phase (explore vs. scale), hardware targets, and deployment pathway. If your team values fast iteration, rich community tutorials, and intuitive debugging, PyTorch feels natural-especially with torch.compile speeding up eager code, plus DDP/FSDP for multi-GPU training and export paths via ONNX/TensorRT. For algorithmic research and large-batch training on TPUs or high-end GPUs, JAX shines: its jit/pjit/pmap stack fuses and parallelizes code through XLA, yielding lean, predictable kernels-ideal for steady-state training and compiled inference. Need industrial-strength pipelines? TensorFlow’s Keras + TF-DF/TF Addons on the modeling side and TFX + SavedModel + TF Serving in production keep experiments reproducible and deployments standardized, while TF Lite and TF.js power edge and browser.

- Favor PyTorch when research velocity, flexible control flow, and GPU-first workflows dominate; deploy with TorchServe, ONNX Runtime, or Torch-TensorRT for low-latency inference.

- Favor JAX for scalable compute graphs and mathematically crisp transformations; compile once and run fast with XLA on TPU/GPU, often via Flax or Haiku for cleaner modeling.

- Favor TensorFlow for end-to-end ML ops: DistributionStrategies for training, TFX for pipelines, TF Serving for online inference, and TF Lite for mobile with quantization and hardware delegates.

| Scenario | Best Pick | Why | Extras |

|---|---|---|---|

| Rapid GPU prototyping | PyTorch | Dynamic, Pythonic | torch.compile boost |

| TPU-scale training | JAX | XLA + pjit | Flax ecosystem |

| Mobile/edge inference | TensorFlow | TF Lite maturity | NNAPI/Core ML |

| Mixed infra serving | ONNX Runtime | Portable EPs | Export from PT/TF |

| Enterprise pipelines | TensorFlow | TFX + Serving | A/B, monitoring |

Pragmatic tip: prototype where your team moves fastest, then harden toward your deployment targets. PyTorch pairs well with GPU servers and mixed-precision plus TensorRT; JAX rewards clean, functional code that compiles into high-performance kernels; TensorFlow reduces friction when you need auditors, schedulers, and fleet-grade serving. For portability, keep an eye on ONNX exports and quantization paths (PTQ/AWQ) to hit budgeted latency. Distributed choices differ-FSDP (PyTorch), pjit/GSPMD (JAX), and MultiWorkerMirroredStrategy (TensorFlow)-so align them with your cluster fabric and observability stack before scaling.

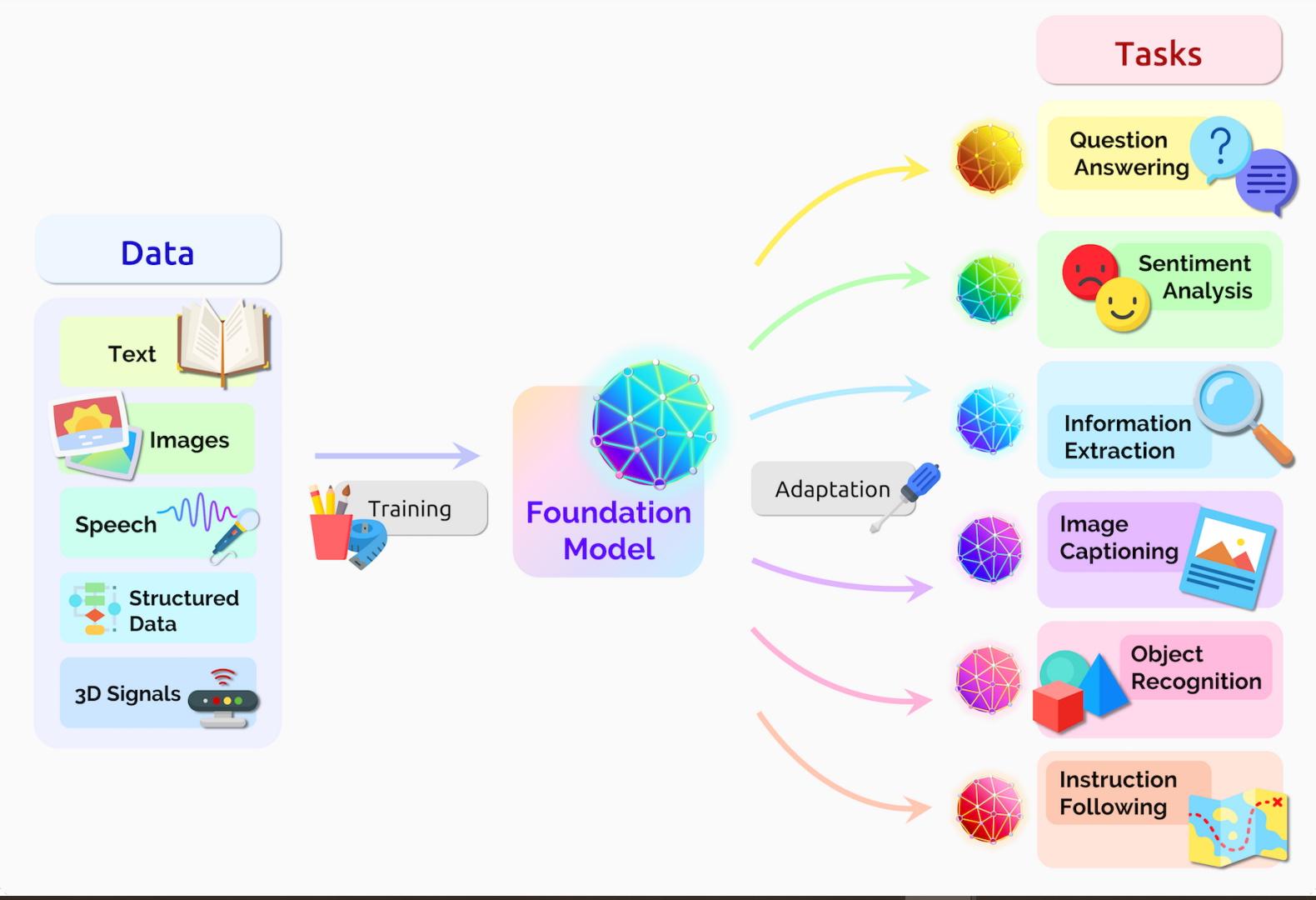

From Hype to Rollout Actionable steps for adopting foundation models retrieval augmented generation and autonomous agents

Turn proofs-of-concept into production wins by anchoring each initiative to a single business metric and a minimal, testable workflow. Start with a thin slice: one task, one audience, one data source. Decide early whether you need a pure foundation model, retrieval-augmented generation, or an agentic pattern, then shape controls around that choice-privacy, latency, cost, and failure modes. Build an evaluation harness you can run nightly; version prompts and datasets; and require human checkpoints where impact or risk is high. Treat the model as just one part of a system with observability, rollback, and budget guardrails baked in.

- Use-case triage: Rank by business value, data readiness, and risk; pick the smallest path to measurable ROI.

- Data fitness: Map sources, owners, and quality; add PII filtering, deduping, and freshness SLAs.

- Model route: Closed API for speed and safety; open weights for control and price; hybrid when needed.

- Controls first: DLP, role-based access, prompt hardening, content filters, and red-teaming scenarios.

- Eval loop: Automatic tests for correctness, grounding, toxicity, and regressions; track cost and latency.

- Shipping rules: Canaries, A/Bs, feature flags, token budgets, and clear fallbacks.

| Layer | Pick | Note |

|---|---|---|

| Foundation Model | API or Open Weights | Speed vs control |

| Embeddings | General or Domain | Match language/data |

| Vector Store | Managed or pgvector | Scale and cost |

| Orchestration | LangChain / SK | Composable flows |

| Guardrails | Policy + Filters | Safety and compliance |

| Observability | Tracing + Metrics | Debug and drift |

| Eval | RAG/Task Suites | Ground truth first |

| Hosting | Cloud or On-Prem | Data residency |

RAG thrives on disciplined retrieval: craft domain-aware chunking, balanced overlap, and metadata-rich indexing; cache frequent queries and add query rewriting to handle user variance. Measure the whole chain-recall@k, groundedness, citation coverage-not just final answers. For agents, start assistive (suggestions), evolve to semi-autonomous (execute within constraints), and only then orchestrate multi-agent flows. Give agents a curated tool catalog with typed inputs, sandboxed execution, explicit rate limits, and human approval for high-impact actions. Make handoffs visible, log every tool call, and prefer deterministic tools for critical steps.

- RAG checklist: Domain chunking, hybrid search (BM25 + vectors), reranking, freshness filters, and citation rendering.

- Agent checklist: Task decomposition, tool permissions, timeouts/retries, memory scopes, and staged approvals.

- KPIs: Task success rate, groundedness, first-token latency, unit cost per outcome, and escalation frequency.

- Go/No-Go gates: ≥X% factual grounding on eval set, ≤Y% unsafe outputs, stable p95 latency, and rollback validated.

- Scale-out: Create a central prompt/model registry, shared eval datasets, and a cross-team Enablement/CoE function.

To Wrap It Up

As this edition of Tech Spotlights wraps, remember that tools, platforms, frameworks, and breakthroughs are less a destination than a workbench. Each piece is useful in context, shaped by your data, constraints, and risk tolerance. The right fit isn’t the shiniest option but the one that aligns with your objectives and can be explained, measured, and maintained.

If you’re exploring what to adopt next, start small and design for learning: set clear success metrics, benchmark against baselines, check documentation and community health, and weigh costs, latency, privacy, and portability. Pay attention to licensing, governance, evaluation methods, and the quiet details of interoperability-they’re where long-term value tends to live.

This landscape moves fast: today’s novelty becomes tomorrow’s utility, while some bright ideas dim under real workloads. We’ll keep separating signal from noise, testing claims, and charting trade-offs so you can make informed, durable choices. Until the next spotlight, keep your stack adaptable and your assumptions testable.